Forecasting Your AWS GPU Cloud Spend for Deep Learning

Author: Josh Patterson

Date: 2/24/2021

In this post we look at how to forecast your AWS GPU spend for Deep Learning workloads. To do this we cover the following topics:

- How a typical user consumes GPU hours over the course of a month

- The types of users in an organization for GPUs

- General resource requirements for working with different types of models

- What kinds of GPUs the users typically train models on

- Building a spreadsheet model for how to forecast a monthly and yearly GPU spend

Why is this a compelling exercise?

Organizations are over-budget for their cloud spend by an average of 23-percent.

Beyond that an organization can easily waste 30% of their cloud spend if they aren't careful. This challenge is only getting bigger with organizations expecting to spend 47% more on the cloud in 2021. Organizations are struggling to control costs because they are spending more than they expect in many cases. Although cloud providers offer nice discounts for future commitments, these only work out if you use the resources you commit to in the future, making resource forecasting a useful topic.

In this post we focus on a specific type of workload to give you a better sense of how to more realistically forecast your GPU spend for Deep Learning training. We're going to focus purely on modeling GPU usage in this post. There are other services that a team may use but for the purposes of this analysis we are going to leave those AWS components (outside of SageMaker) out of the cost model for now.

How Does a Data Scientist Use Public Cloud GPU Hours?

The foundation of modeling GPU usage on the cloud is to document how our prototypical data scientist works. To profile a typical organization's data scientist's deep learning habits, we'll consider the following areas:

- What are the types of specific Deep Learning workloads they run and how many resources they require

- Note the different types of public cloud GPU options on AWS

- Understand any other tools on AWS, such as SageMaker, that may be relevant to building our cost model

Resource Requirements for Different Types of Deep Learning Workflows

We'll use Tim Dettmer's blog article on "Which GPU for Deep Learning" as a good starting place for both Deep Learning tasks and memory requirements per task type:

- Using pretrained transformers; training small transformer from scratch >= 11GB

- Training large transformer or convolutional nets in research / production: >= 24 GB

- Prototyping neural networks (either transformer or convolutional nets) >= 10 GB

- Kaggle competitions >= 8 GB

- Applying computer vision >= 10GB

- Neural networks for video: 24 GB

- Reinforcement learning: 10GB (+ a strong deep learning desktop the largest Threadripper or EPYC CPU you can afford).

Many times (especially on twitter) the discussion around Deep Learning only focuses on the academic research aspect of training and development. For our case we're focused on the prototypical Fortune 500 company's data science group, so the workloads are going to be more diverse (read: not everyone is training GPT3).

For the purposes of modeling an enterprise data science group doing deep learning on AWS GPUs, we will split our users into two groups:

- Power Users

- Normal Users

Beyond that, we'll say that some of our "power users" may want to do things such as "train a new transformer" or train convolutional neural networks and that will require more than 24GB of GPU RAM in many cases.

A quick note on the relationship between GPUs and model accuracy below.

"We want to make a clear distinction around what we gain from GPUs, as many times this seems to be conflated in machine learning marketing. We define “predictive accuracy of model” as how accurate the model’s predictions are with respect to the task at hand (and there are multiple ways to rate models). Another facet of model training is “training speed,” which is how long it takes us to train for a number of epochs (passes over an entire input training dataset) or to a specific accuracy/metric. We call out these two specific definitions because we want to make sure you understand:

So GPUs can indirectly help model accuracy, but we still want to set expectations here.."

- GPUs will not make your model more accurate.

- However, GPUs will allow us to train a model faster and find better models (which may be more accurate) faster.

Oreilly's "Kubeflow Operations Guide", Patterson, Katzenellenbogen, Harris (2020)

Now that we've established our groups of users, what kinds of workloads they want to do, and also what some minimum GPU RAM requirements are for those workloads, we can move on and see what kind of AWS instances are offered that will support these workloads.

Understanding the Different Types of Public Cloud GPU Options

In this section we're going to document which GPUs are offered in which instance types on AWS (and which GPUs are not). Using this table of GPUs, we can then compare their RAM and relative power to best determine which are best suited for our enterprise deep learning team's use cases.

There are many different type of GPU chipsets offered by NVIDIA and sometimes it gets confusing as to which GPU is best for your workload. On top of that, AWS offers different GPUs in different instance types, so you need a map of instance type to GPU type as well. Additionally, NVIDIA won't allow certain types of GPUs to be used in a datacenter so this further filters down which GPUs are offered by AWS.

Here we're looking at the enterprise / data center as context for our GPU usage so we'll begin by listing the GPUs you won't find as public cloud instances (due to NVIDIA not rating them as suitable for data center use):

- Titan V

- Titan RTX

- RTX 2080 Ti

- RTX 2080

- M60 (g3 instances)

- K80 (p2 instances)

- T4 (g4 instances)

- V100 (p3 instances)

- A100 (p4 instances)

- M60s are considered virtual workstations for graphics work, should be considered last options for Deep Learning in this group

- The K80 is the p2.xlarge on amazon and is roughly 1/5 as powerful as the V100

- The T4 chipset (G4 AWS Instances) is focused on inference workloads

- The V100 GPU is the same series as in the NVIDIA DGX-1 cluster

- The A100 is only offered (as of today) in an 8xGPU configuration

Our regular users need from 8GB to 16GB of ram for their workloads, as described above, and they would either be using a T4 (g4 instance) or a V100 (16GB GPU RAM) as their main training GPU. A V100 is roughly 3x more powerful than a T4 GPU, for reference. Both p3 and g4 instances allow support for larger models (via more GPU RAM), high throughput, and low-latency access to CUDA. Both of these instances have support for mixed-precision training as well. Given that we can "just wait longer" in many cases for training, the gating mechanism for choosing an instance type ends up being "enough GPU memory for our use case".

Given that most users will go for more power if available, we imagine most of our users will opt for the more powerful p3.2xlarge instance but we'll cap them at a single GPU-profile to keep costs down.

Our power users need a bit more ram so we'll give them the p3.8xlarge instance with 64GB GPU RAM. In the next section we'll show the full table of p3 instances and their pricing. Let's now build up a hypothetical group of data scientists based on our profiles and calculate the team's GPU spend.

Building the GPU Spend Forecast

At this point in our process we know what workloads we are running and what kind of AWS GPU instances we're going to forecast our spend based on, so now we need to come up with a group of data scientists and how often they use GPU hours in the cloud.

Once we have our total monthly hours per group, we can forecast out our yearly AWS GPU spend.

Profiling Our Data Science Team

As we mentioned before we have two groups of users: regular users, and power users. For the purposes of this exercise let's say we have 10 regular users and then 5 power users in our overall data science group.

Model training workflows can take seconds up to days to run, depending on the complexity of the model, the amount of data, and other factors. The regular users' jobs will tend to take less time to train because they will be less complex.

The power users we've queried say some of their GPU-based training jobs can take multiple days, and then other times maybe a few hours. We will set their monthly budget of GPU hours at 900 GPU hours per month as they are training multiple models at the same time in some cases.

We'll set our regular users' GPU hours at 40% of the power users so that has them consuming 360 GPU hours per month.

It's worth mentioning that you are charged for hours the instances are allocated, not hours that we're actually training, so its easy for a team to accrue cloud usage hours faster than they think.

AWS G4 Instance Pricing

In the table below we show AWS' pricing for the g4 instances.

It's worth noting that all single GPU T4-based g4 instances have 16GB of GPU RAM as this is not completely clear from the g4 instance page.

Instance Size |

GPUs - Tesla T4 |

--- |

GPU Memory (GB) |

vCPUs |

Memory (GB) |

Network Bandwidth (Gbps) |

EBS Bandwidth |

On-Demand Price/hr* |

1-yr Reserved Instance Effective Hourly* |

3-yr Reserved Instance Effective Hourly* |

Blended Price Avg(OnDemand and 1yr Reserved) |

|---|---|---|---|---|---|---|---|---|---|---|---|

| g4dn.xlarge | 1 GPU | 16 GiB | 4 | 16 | Up to 25 | Up to 3.5 | $0.526 | $0.316 | $0.210 | $0.421 | |

| g4dn.2xlarge | 1 GPU | 16 GiB | 8 | 32 | Up to 25 | Up to 3.5 | $0.752 | $0.452 | $0.300 | $0.602 | |

| g4dn.4xlarge | 1 GPU | 16 GiB | 16 | 48 | Up to 25 | 4.75 | $1.204 | $0.722 | $0.482 | $0.963 | |

| g4dn.8xlarge | 1 GPU | 16 GiB | 32 | 128 | 50 | 9.5 | $2.176 | $1.306 | $0.870 | $1.741 | |

| g4dn.16xlarge | 1 GPU | 16 GiB | 64 | 256 | 50 | 9.5 | $4.352 | $2.612 | $1.740 | $3.482 | |

| Multi-GPUs: | |||||||||||

| g4dn.12xlarge | 4 GPU | 64 GiB | 48 | 192 | 50 | 9.5 | $3.912 | $2.348 | $1.564 | $3.130 |

Given the limited GPU memory ceiling (16GB outside of the g4dn.12xlarge) and the T4 being 1/3 as powerful as the V100, the g4 ends up being most appropriate for our "Kaggle"-users who are exploring notebooks or doing inference with pre-trained models.

AWS P3 Instance Pricing

In the table below we show AWS' pricing for the p3 instances.

Instance Size | GPUs - Tesla V100 |

GPU Peer to Peer |

GPU Memory (GB) | vCPUs | Memory (GB) | Network Bandwidth | EBS Bandwidth | On-Demand Price/hr* | 1-yr Reserved Instance Effective Hourly* | 3-yr Reserved Instance Effective Hourly* | Blended Price Avg(OnDemand and 1yr Reserved) |

|---|---|---|---|---|---|---|---|---|---|---|---|

p3.2xlarge | 1 | N/A | 16 | 8 | 61 | Up to 10 Gbps | 1.5 Gbps | $3.06 | $1.99 | $1.05 | $2.53 |

p3.8xlarge | 4 | NVLink | 64 | 32 | 244 | 10 Gbps | 7 Gbps | $12.24 | $7.96 | $4.19 | $10.10 |

p3.16xlarge | 8 | NVLink | 128 | 64 | 488 | 25 Gbps | 14 Gbps | $24.48 | $15.91 | $8.39 | $20.20 |

p3dn.24xlarge | 8 | NVLink | 256 | 96 | 768 | 100 Gbps | 19 Gbps | $31.22 | $18.30 | $9.64 | $24.76 |

We've added a column at the end where we've averaged the price of On-Demand instance pricing and 1-Year Reserved Instances. For this cost model this is the hourly cost we use for instances because we want to account for the fact that some AWS resources will be used by good forecasting and 1-Year Reserved instances at the lower price. However, we also want to account for users who will end up using On-Demand instances, so we average the two prices together.

AWS EC2 and SageMaker

In some cases a data scientist may choose to simply send a container to a cloud instance and run it from the command-line. However, modern machine learning tooling uses dashboards, model metadata tracking, and other libraries.

AWS offers SageMaker as their machine learning platform in this case. AWS SageMaker is a development platform for machine learning practitioners that offers a fully-managed environment for building, training, and deploying machine learning at scale. SageMaker offers a Jupyter Notebook instance that allows the user to train a model and further use SageMaker’s API to drive other AWS services for tasks such as model deployment or hyperparameter tuning. All of the services are available via a high-level Python API in SageMaker.

Under the hood SageMaker uses Docker images and S3 storage for training and model inference. You can also provide a Docker image to SageMaker containing your machine learning training code in any framework or language you want to use.

AWS does not provide a clear pricing model for SageMaker as compared to traditional EC2 instances, so this makes it harder to forecast for pricing. For the purposes of this document and pricing model we assume SageMaker introduces a 40% increase in cost compared to running a regular EC2 instance.

At this point we have enough information to build our GPU cost forecast, so let's get to it.

Calculating the Forecast

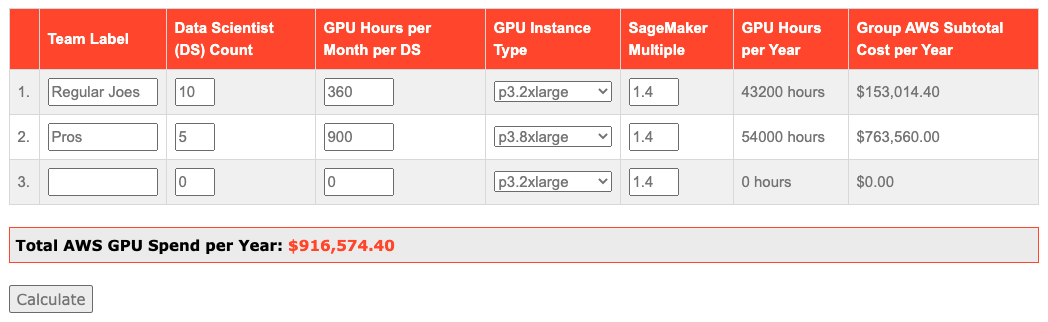

For both groups, we take their projected GPU hours per month and calculate the GPU hours per year (Monthly GPU Hours x 12 months). For the power users we are allocating p3.8xlarge instances and we're using the averaged cost (On Demand and 1-Year Reserved averaged price) to calculate the yearly cost per user. From that we multiply the user cost by 1.4x to get the SageMaker overhead cost per year. We can see the math displayed in the spreadsheet shot below.

Once we have a cost per user per year, we multiply that number by the "Number of Users" in each group and add both of those numbers together.

This give us a yearly AWS cost for GPUs (with SageMaker) of $916,574.00.

If you want to run a quick variation on the above calculation, check out our online GPU cost calculator.

Other Considerations

Now that we've walked through the complete sizing exercise, we'll note a few other relevant topics that can impact your cloud spending decisions.

Some accounting departments will want a certain amount of spend to be a capital expense (CapEx) as opposed to an operational expenses (OpEx). Using cloud services is purely an operational expense, for reference. The reason accounting likes some of the expenses to be CapEx is that they can depreciate the hardware over time and help pay for the cost through reduced taxable income.

Another aspect of using cloud is how the billing is percieved. Previously your company dealt with many different enterprise vendors for different components. Once you started consolidating infrastructure services on public cloud, this began to consolidate cost into a single vendor's line item and accounting will note this increase for a single vendor as anomalous (and in some cases you are using more cloud services than anticipated). Combine this with the fact that you've moved a non-trivial amount of capital expenses over to the operational expenses column and this creates an interesting discussion with accounting. It's not good or bad, but it will change how accounting approaches things and there will likely be discussions to be had. From a pure cost perspective, these are aspects of using cloud services you'll need to consider as you find your way in the public cloud landscape.

The cost discussion is often intense, but many times IT will fire back "but the cloud is easier!"

In many cases this is true. For a real-time application we see the cloud services designed to be redundant, fault tolerant, and geographically-aware out of the box. In other cases, for workloads such as deep learning, many of these benefits are not as useful as you are looking for pure GPU horsepower in many cases.

Lastly we'll reiterate that you are charged for what you forget to turn off, and those GPU hours consumed can quickly pile up from notebook servers that are sitting idle. Another hidden cost is data that is transferred between AWS availability zones, where this is not a cost in an on-premise data center. We call these out to illustrate the need to be aware of the true cost of the agility of public cloud services.

Summary

As an AWS partner, we hope this article was useful for your organization, or just made you more aware about how to think about public cloud cost analysis.

Did you like the article? Do you think we are making a poor estimation somewhere in our calculations? Reach out and tell us.

Free GPU Cloud Spend Analysis

Would you like a customized cloud GPU spend analysis for your organization? Reach out and we’ll provide your company this GPU usage analysis report free of charge.